2020-09-05

Testing in Django: automated testing is an extremely useful bug-killing tool for the modern Web developer. You can use a collection of tests, a test suite, to solve or avoid a number of problems:

- When you’re writing new code, you can use tests to validate that your code works as expected.

- When you’re refactoring or modifying old code, you can use tests to ensure your changes haven’t affected your application’s behavior unexpectedly.


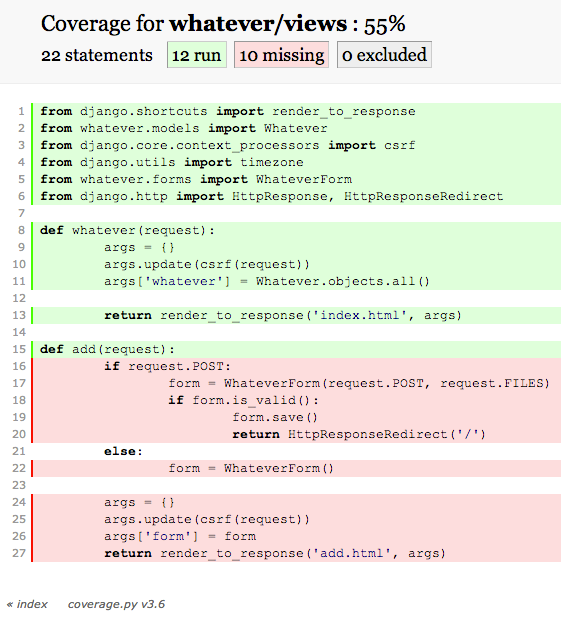

- Django-nose provides all the goodness of nose in your Django tests, such as: testing just your apps by default, not all the standard ones that happen to be in INSTALLED_APPS; running the tests in one or more specific modules (or apps, or classes, or folders, or just a specific test); and obviating the need to import all your tests.

This is a blog post version of the talk I gave at DjangoCon Australia 2020 today. The video is on YouTube and the slides are on GitHub (including full example code).

You run your tests with manage.py test. You know what happens inside your tests, since you write them. But how does the test runner work to execute them, and put the dots, E’s, and F’s on your screen?

When you learn how Django middleware works, you unlock a huge number of use cases, such as changing cookies, setting global headers, and logging requests. Similarly, learning how your tests run will help you customize the process, for example loading tests in a different order, configuring test settings without a separate file, or blocking outgoing HTTP requests.

In this post, we’ll make a vital customization of our test run’s output - we’ll swap the “dots and letters” default style to use emojis to represent test success, failure, etc:

But before we can write that, we need to deconstruct the testing process.

Test Output

Let’s investigate the output from a test run. We’ll use a project containing only this vacuous test:
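As a stand-in for that test, here is a minimal sketch. It subclasses plain unittest.TestCase so it runs without a Django project; Django’s own test case classes build on unittest.TestCase, and Django’s runner collects plain unittest tests too. The file location in the comment is hypothetical.

```python
# example/tests.py (hypothetical location inside a Django app)
import unittest


class ExampleTests(unittest.TestCase):
    def test_one(self):
        # Intentionally empty: a test with no assertions always passes.
        pass
```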

When we run the tests, we get some familiar output:

To investigate what’s going on, we can ask for more detail by increasing the verbosity to maximum with -v 3:

Okay great, that’s plenty! Let’s take it apart.

The first line, “Creating test database…”, is Django reporting the creation of a test database. If your project uses multiple databases, you’ll see one line per database.

I’m using SQLite in this example project, so Django has automatically set mode=memory in the database address. This makes database operations about ten times faster. Other databases like PostgreSQL don’t have such modes, but there are other techniques to run them in-memory.
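To show what an in-memory SQLite address looks like, here is a small standard-library sketch. The database name testdb is made up; it is not the name Django generates. mode=memory keeps the database in RAM, and cache=shared lets a second connection in the same process open the same database, which a plain ":memory:" connection would not allow.

```python
import sqlite3

# A URI filename: "mode=memory" keeps the data in RAM, and "cache=shared"
# lets other connections in this process open the same named database.
URI = "file:testdb?mode=memory&cache=shared"

writer = sqlite3.connect(URI, uri=True)
reader = sqlite3.connect(URI, uri=True)

writer.execute("CREATE TABLE item (name TEXT)")
writer.execute("INSERT INTO item VALUES ('widget')")
writer.commit()

# The second connection sees the committed row, and no file was written.
rows = reader.execute("SELECT name FROM item").fetchall()

# The database disappears once the last connection to it closes.
writer.close()
reader.close()
```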

The second line, “Operations to perform”, and several following lines are the output of the migrate command on our test databases. This output is identical to what we’d see running manage.py migrate on an empty database. Here I’m using a small project with no migrations; on a typical project you’d see one line for each migration.

After that, we have the line saying “System check identified no issues”. This output is from Django’s system check framework, which runs a number of “preflight checks” to ensure your project is well configured. You can run it alone with manage.py check, and it also runs automatically in most management commands. Typically it’s the first step before a management command, but for tests it’s deferred until after the test databases are ready, since some checks use database connections.

You can write your own checks to detect configuration bugs. Because they run first, they’re sometimes a better fit than writing a test. I’d love to go into more detail, but that would be another post’s worth of content.

The following lines cover our tests. By default the test runner prints only a single character per test, but at a higher verbosity Django prints a whole line per test. Here we only have one test, “test_one”, and when it finished running, the test runner appended its status, “ok”, to the line.
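The two output styles come from unittest’s TextTestRunner, which receives the verbosity level Django was given. This standard-library sketch runs the same one-test suite at verbosity 1 and 2 and captures each run’s output in a string; the class and helper names are illustrative.

```python
import io
import unittest


class ExampleTests(unittest.TestCase):
    def test_one(self):
        pass


def run_with_verbosity(verbosity):
    """Run ExampleTests once and return everything the runner printed."""
    stream = io.StringIO()
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(ExampleTests)
    unittest.TextTestRunner(stream=stream, verbosity=verbosity).run(suite)
    return stream.getvalue()


# Verbosity 1 (the default): one character per test, "." for a pass.
default_output = run_with_verbosity(1)
# Verbosity 2 and above: one line per test, naming it and ending in "... ok".
verbose_output = run_with_verbosity(2)
```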

To signify the end of the run, there’s a divider made of many dashes: “-----”. If we had any failures or errors, their stack traces would appear before this divider. This is followed by a summary of the tests that ran, their total runtime, and “OK” to indicate the test run was successful.

The final line reports the destruction of our test database.

This gives us a rough order of steps in a test run:

- Create the test databases.

- Migrate the databases.

- Run the system checks.

- Run the tests.

- Report on the test count and success/failure.

- Destroy the test databases.

Let’s track down which components inside Django are responsible for these steps.

Django and unittest

As you may be aware, Django’s test framework extends the unittest framework from the Python standard library. Every component responsible for the above steps is either built into unittest or one of Django’s extensions. We can represent this with a basic diagram:

We can find the components on each side by tracing through the code.

The “test” Management Command

The first place to look is the test management command, which Django finds and executes when we run manage.py test. This lives in django.core.management.commands.test.

As management commands go, it’s quite short: under 100 lines. Its handle() method is mostly concerned with handing off to a “Test Runner”. Simplifying it down to three key lines:

(Full source.)
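Those three lines are not reproduced here, so below is a rough, runnable stand-in for the hand-off rather than Django’s source. In Django, the runner class comes from the TEST_RUNNER setting via the get_runner() helper in django.test.utils; load_runner_class() and handle() are hypothetical standard-library-only names so the sketch runs without a project.

```python
import importlib


def load_runner_class(dotted_path):
    """Hypothetical helper: import "package.module.ClassName", return the class."""
    module_path, _, class_name = dotted_path.rpartition(".")
    module = importlib.import_module(module_path)
    return getattr(module, class_name)


def handle(test_labels, runner_path, **options):
    """Sketch of the test command handing off to a test runner class."""
    TestRunner = load_runner_class(runner_path)  # in Django: from TEST_RUNNER
    test_runner = TestRunner(**options)
    failures = test_runner.run_tests(test_labels)
    # Django's command exits with status 1 when failures is non-zero.
    return failures
```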

So what’s this TestRunner class? It’s a Django component that coordinates the test process. It’s customizable, but the default class, and the only one in Django itself, is django.test.runner.DiscoverRunner. Let’s look at that next!

The DiscoverRunner Class

DiscoverRunner is the main coordinator of the test process. It handles adding extra arguments to the management command, creating and handing off to sub-components, and doing some environment setup.

It starts like this:

(Documentation, Full source.)

These class-level attributes point to other classes that perform different steps in the test process. You can see most of them are unittest components.

Note that one of them is called test_runner, so we have two distinct concepts called “test runner”: Django’s DiscoverRunner and unittest’s TextTestRunner. DiscoverRunner does a lot more than TextTestRunner and has a different interface. Perhaps Django could have used a different name for DiscoverRunner, like TestCoordinator, but it’s probably too late to change that now.

The main flow in DiscoverRunner is in its run_tests() method. Stripping out a bunch of details, run_tests() looks something like this:
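Here is a runnable toy version of that flow using only the standard library. It is not Django’s code: the environment, database, and check methods are stubs that just record their names so the call order is visible, while build_suite() and run_suite() do real work with unittest. SketchRunner and ExampleTests are made-up names.

```python
import io
import unittest


class ExampleTests(unittest.TestCase):
    def test_one(self):
        pass


class SketchRunner:
    """Toy coordinator that mimics the order of DiscoverRunner.run_tests().

    Environment, database, and check methods are stubs that only record
    their names; in Django they do the real work.
    """

    def __init__(self):
        self.calls = []

    def setup_test_environment(self):
        self.calls.append("setup_test_environment")

    def build_suite(self, test_cases):
        self.calls.append("build_suite")
        loader = unittest.defaultTestLoader
        return unittest.TestSuite(
            loader.loadTestsFromTestCase(case) for case in test_cases
        )

    def setup_databases(self):
        self.calls.append("setup_databases")
        return {}  # stand-in for the state needed to tear the databases down

    def run_checks(self):
        self.calls.append("run_checks")

    def run_suite(self, suite):
        self.calls.append("run_suite")
        return unittest.TextTestRunner(stream=io.StringIO()).run(suite)

    def teardown_databases(self, old_config):
        self.calls.append("teardown_databases")

    def teardown_test_environment(self):
        self.calls.append("teardown_test_environment")

    def suite_result(self, suite, result):
        # The number handed back to the test command.
        return len(result.failures) + len(result.errors)

    def run_tests(self, test_cases):
        self.setup_test_environment()
        suite = self.build_suite(test_cases)
        old_config = self.setup_databases()
        try:
            self.run_checks()
            result = self.run_suite(suite)
        finally:
            # Clean up even if the checks or the tests raise.
            self.teardown_databases(old_config)
            self.teardown_test_environment()
        return self.suite_result(suite, result)


runner = SketchRunner()
failures = runner.run_tests([ExampleTests])
```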

That’s quite a few steps! Many of the called methods correspond to steps in our list above:

- setup_databases() creates the test databases. This only creates the databases necessary for the selected tests, as filtered by get_databases(), so if you run only database-free SimpleTestCases, Django doesn’t create any databases. Internally, it both creates the databases and runs the migrate command.

- run_checks(), well, runs the checks.

- run_suite() runs the test suite, including all the output.

- teardown_databases() destroys the test databases.

A couple of other methods are things we may have expected:

- setup_test_environment() and teardown_test_environment() set and unset some settings, such as the local email backend.

- suite_result() returns the number of failures to the test management command.

All these methods are useful to investigate for customizing those parts of the test process. But they’re all part of Django itself. The other methods hand off to components in unittest: build_suite() and run_suite(). Let’s investigate those in turn.

build_suite()

build_suite() is concerned with finding the tests to run and putting them into a “suite” object. It’s again a long method, but simplified, it looks something like this:

This method uses three of the four classes that DiscoverRunner refers to:

- test_suite: a unittest component that acts as a container for tests to run.

- parallel_test_suite: a wrapper around a test suite, used with Django’s parallel testing feature.

- test_loader: a unittest component that can find test modules on disk and load them into a suite.
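To see the suite and loader working together without Django, this standard-library sketch writes a throwaway test module to a temporary directory and lets a unittest loader discover it. build_suite() here is a simplified, hypothetical stand-in for Django’s method: it handles a single directory and skips the parallel_test_suite wrapping. It uses a fresh TestLoader rather than the shared default one, because discover() keeps some state on the loader.

```python
import pathlib
import tempfile
import textwrap
import unittest


def build_suite(start_dir, pattern="test*.py"):
    """Discover test modules under start_dir and collect them in one suite."""
    suite = unittest.TestSuite()      # plays the role of "test_suite"
    loader = unittest.TestLoader()    # plays the role of "test_loader"
    suite.addTests(loader.discover(start_dir, pattern=pattern))
    return suite


# Write a throwaway test module to disk so discovery has something to find.
with tempfile.TemporaryDirectory() as tmp:
    source = textwrap.dedent(
        """
        import unittest


        class ExampleTests(unittest.TestCase):
            def test_one(self):
                pass
        """
    )
    pathlib.Path(tmp, "test_sketch_example.py").write_text(source)
    suite = build_suite(tmp)
    count = suite.countTestCases()
```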


run_suite()

The other DiscoverRunner method to look into is run_suite(). We don’t need to simplify this one; its entire implementation looks like this:

Its only concern is constructing a test runner and telling it to run the constructed test suite. This is the final one of the unittest components referred to by a class attribute. It uses unittest.TextTestRunner, which is the default runner for outputting results as text, as opposed to, for example, an XML file to communicate results to your CI system.
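The same hand-off can be sketched with the standard library alone. RunSuiteSketch is a hypothetical stand-in, not Django’s class: it keeps test_runner as a class-level attribute, gathers the runner’s keyword arguments in one method (as DiscoverRunner does with get_test_runner_kwargs()), and adds a stream argument of its own so the output can be captured.

```python
import io
import unittest


class RunSuiteSketch:
    """Hypothetical stand-in for DiscoverRunner's run_suite() step."""

    # Class-level, so a subclass can swap in a different runner class.
    test_runner = unittest.TextTestRunner

    def __init__(self, verbosity=1, failfast=False):
        self.verbosity = verbosity
        self.failfast = failfast
        self.stream = io.StringIO()  # this sketch's addition: capture output

    def get_resultclass(self):
        return None  # None: TextTestRunner keeps its default result class

    def get_test_runner_kwargs(self):
        return {
            "verbosity": self.verbosity,
            "failfast": self.failfast,
            "resultclass": self.get_resultclass(),
            "stream": self.stream,
        }

    def run_suite(self, suite):
        runner = self.test_runner(**self.get_test_runner_kwargs())
        return runner.run(suite)


class ExampleTests(unittest.TestCase):
    def test_one(self):
        pass


sketch = RunSuiteSketch()
result = sketch.run_suite(
    unittest.defaultTestLoader.loadTestsFromTestCase(ExampleTests)
)
```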

Let’s finish our investigation by looking inside that class.

The TextTestRunner Class

This component inside unittest takes a test case or suite and executes it. It starts:

(Full source.)

Similarly to DiscoverRunner, it uses a class-level attribute to refer to another class. The default TextTestResult class is the thing that actually writes the text-based output. Unlike DiscoverRunner’s class references, we can override resultclass by passing an alternative to TextTestRunner.__init__().
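A short standard-library demonstration of both points: TextTestRunner’s class-level default, and overriding it for one runner instance through the resultclass argument. RecordingResult is a hypothetical result class that behaves like the default but also remembers which tests passed.

```python
import io
import unittest


class RecordingResult(unittest.TextTestResult):
    """Hypothetical result class: default output, plus a list of passes."""

    def __init__(self, *args, **kwargs):
        super().__init__(*args, **kwargs)
        self.passed = []

    def addSuccess(self, test):
        super().addSuccess(test)
        self.passed.append(test.id())


class ExampleTests(unittest.TestCase):
    def test_one(self):
        pass


# The class attribute holds the default...
assert unittest.TextTestRunner.resultclass is unittest.TextTestResult

# ...and the constructor argument overrides it for this runner only.
runner = unittest.TextTestRunner(stream=io.StringIO(), resultclass=RecordingResult)
result = runner.run(unittest.defaultTestLoader.loadTestsFromTestCase(ExampleTests))
```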

We’re now ready to customize the test process. But first, let’s review our investigation.

A Map

We can now expand our map to show the classes we’ve found:

There’s certainly more detail we could add, such as the contents of several important methods in DiscoverRunner. But what we have is more than enough to implement many useful customizations.

How to Customize

Django offers us two ways to customize the test running process:

- Override the test command with a custom subclass.

- Override the DiscoverRunner class by pointing the TEST_RUNNER setting at a custom subclass.

Because the test command is so simple, most of the time we’ll customize by overriding DiscoverRunner. Since DiscoverRunner refers to the unittest components via class-level attributes, we can replace them by redefining the attributes in our custom subclass.

Super Fast Test Runner

For a basic example, imagine we want to skip all our tests and report success every time. We can do this by creating a DiscoverRunner subclass with a new run_tests() method that doesn’t call its super() method:

Then we use it in our settings file like so:
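For example, assuming a SuperFastTestRunner class defined in a module importable as example.test (a hypothetical path), the setting would be a dotted path string:

```python
# settings.py
TEST_RUNNER = "example.test.SuperFastTestRunner"
```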

When we run manage.py test now, it completes in record time!

Great, that is very useful.

Let’s finally get on to our much more practical emoji example.

Emoji-Based Output

From our investigation we found that the unittest component TextTestResult is responsible for performing the output. We can replace it in DiscoverRunner by having it pass a value for resultclass to TextTestRunner.

Django already has some options to swap resultclass, for example the --debug-sql option which prints executed queries for failing tests.

DiscoverRunner.run_suite() constructs TextTestRunner with arguments from the DiscoverRunner.get_test_runner_kwargs() method:

This in turn calls get_resultclass(), which returns a different class if one of two test command options (--debug-sql or --pdb) have been used:

If neither option is set, this method implicitly returns None, telling TextTestRunner to use its default resultclass. We can detect this None in our custom subclass and replace it with our own TextTestResult subclass:

Our EmojiTestResult class extends TextTestResult and replaces the default “dots” output with emoji.It ends up being quite long since it has one method for each type of test result:

After pointing the TEST_RUNNER setting at EmojiTestRunner, we can run tests and see our emoji:

Yay! 👍

No Composition

After our spelunking, we’ve seen the unittest design is relatively straightforward. We can swap classes for subclasses to change any behaviour in the test process.

This works for some project-specific customizations, but it’s not very easy to combine others’ customizations. This is because the design uses inheritance and not composition. We have to use multiple inheritance across the web of classes to combine customizations, and whether this works depends very much on the implementation of the customizations. Because of this, there’s not really a plugin ecosystem for unittest.

I know of only two libraries providing custom DiscoverRunner subclasses:

- unittest-xml-reporting - provides XML output for your CI system to read.

- django-slow-tests - provides measurement of test execution time, to find your slowest tests.

I haven’t tried, but combining them may not work, since they both override parts of the output process.

In contrast, pytest has a flourishing ecosystem with over 700 plugins. This is because its design uses composition with hooks, which act similarly to Django signals. Plugins register for only the hooks they need, and pytest calls every registered hook function at the corresponding point of the test process. Many of pytest’s built-in features are even implemented as plugins.
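For comparison, here is roughly how the emoji idea could look as a pytest hook, assuming pytest’s pytest_report_teststatus hook defined in a conftest.py. The emoji and the "PASSED" word are this sketch’s choices. Because the function only reads attributes of the report object, it can be exercised without pytest installed.

```python
# conftest.py: pytest finds hook functions here by name.
def pytest_report_teststatus(report, config):
    """Replace pytest's "." with an emoji for passing tests."""
    if report.when == "call" and report.passed:
        # (result category, short progress symbol, verbose word)
        return "passed", "✅", "PASSED"
    # Returning None lets pytest fall back to its default status.
    return None
```

Only this one hook is implemented, and pytest keeps its own behaviour for every other report.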

If you’re interested in heavier customization of your test process, do check out pytest.

Fin

Thank you for joining me on this tour. I hope you’ve learned something about how Django runs your tests, and can make your own customizations.

—Adam


© 2020 All rights reserved.

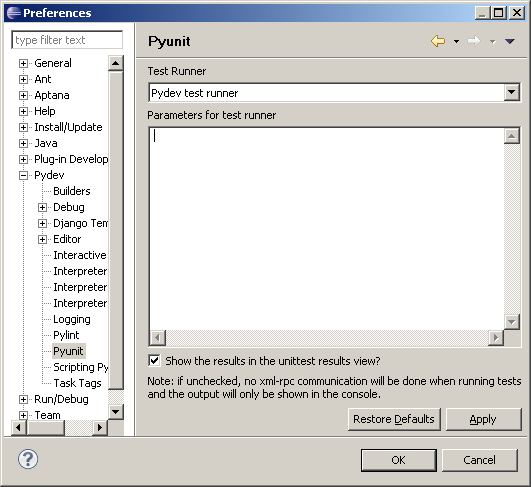

Use this dialog to create a run/debug configuration for Django tests.

Configuration tab

| Item | Description |
|---|---|
| Target | Specify the target to be executed. If the field is left empty, it means that all the tests in all the applications specified in … Same rules apply to the doctests contained in the test targets. The test label is used as the path to the test method or class to be executed. If there is a function with a doctest, or a class with a class-level doctest, you can invoke that test by appending the name of the test method or class to the label. |
| Custom settings | If this checkbox is selected, Django test will run with the specified custom settings, rather than with the default ones. Specify the fully qualified name of the file that contains Django settings. You can either type it manually in the text field to the right, or click the browse button and select one in the dialog that opens. If this checkbox is not selected, Django test will run with the default settings, defined in the Settings field of the Django page, and the text field is disabled. |
| Options | If this checkbox is selected, it is possible to specify parameters to be passed to the Django tests. Type the list of parameters in the text field to the right, prepending parameters with '--' and using spaces as delimiters. For example: … If this checkbox is not selected, the text field is disabled. |
| **Environment** | |
| Project | Click this list to select one of the projects, opened in the same PyCharm window, where this run/debug configuration should be used. If there is only one open project, this field is not displayed. |
| Environment variables | This field shows the list of environment variables. If the list contains several variables, they are delimited with semicolons. To fill in the list, click the browse button, or press Shift+Enter and specify the desired set of environment variables in the Environment Variables dialog. To create a new variable, click the Add button and type the desired name and value. You might want to populate the list with the variables stored as a series of records in a text file, for example: Variable1 = Value1 Variable2 = Value2. Just copy the list of variables from the text file and click Paste in the Environment Variables dialog. The variables will be added to the table. Click Ok to complete the task. At any time, you can select all variables in the Environment Variables dialog, click Copy, and paste them into a text file. |
| Python Interpreter | Select one of the pre-configured Python interpreters from the list. When PyCharm stops supporting any of the outdated Python versions, the corresponding Python interpreter is marked as unsupported. |
| Interpreter options | In this field, specify the command-line options to be passed to the interpreter. If necessary, click the expand button and type the string in the editor. |
| Working directory | Specify a directory to be used by the running task. |
| Add content roots to PYTHONPATH | Select this checkbox to add all content roots of your project to the environment variable PYTHONPATH. |
| Add source roots to PYTHONPATH | Select this checkbox to add all source roots of your project to the environment variable PYTHONPATH. |
| **Docker container settings** | This field only appears when a Docker-based remote interpreter is selected for a project. Click to open the dialog and specify the following settings: … |
| Options | Click to expand the tables. Click , , or to make up the lists. |
| **Docker Compose** | This field only appears when a Docker Compose-based remote interpreter is selected. |
| Commands and options | You can use the following commands of the Docker Compose Command-Line Interface: … |
| Command Preview | You can expand this field to preview the complete command string. Example: if you enter the following combination in the Commands and options field: up --build exec --user jetbrains, the preview output should look as follows: docker-compose -f C:PyCharm-2019.2Demosdjangodocker-masterdocker-compose.yml -f <override configuration file> up --build exec --user jetbrains |

Logs tab

Use this tab to specify which log files generated while running or debugging should be displayed in the console, that is, on the dedicated tabs of the Run or Debug tool window.

| Item | Description |
|---|---|
| Is Active | Select checkboxes in this column to have the log entries displayed in the corresponding tabs in the Run tool window or Debug tool window. |
| Log File Entry | The read-only fields in this column list the log files to show. The list can contain: … |
| Skip Content | Select this checkbox to have the previous content of the selected log skipped. |
| Save console output to file | Select this checkbox to save the console output to the specified location. Type the path manually, or click the browse button and point to the desired location in the dialog that opens. |
| Show console when a message is printed to standard output stream | Select this checkbox to activate the output console and bring it forward if an associated process writes to Standard.out. |
| Show console when a message is printed to standard error stream | Select this checkbox to activate the output console and bring it forward if an associated process writes to Standard.err. |
| | Click this button to open the Edit Log Files Aliases dialog where you can select a new log entry and specify an alias for it. |
| | Click this button to edit the properties of the selected log file entry in the Edit Log Files Aliases dialog. |
| | Click this button to remove the selected log entry from the list. |
| | Click this button to edit the selected log file entry. The button is available only when an entry is selected. |

Common settings

When you edit a run configuration (but not a run configuration template), you can specify the following options:

| Item | Description |
|---|---|
| Name | Specify a name for the run/debug configuration to quickly identify it when editing or running the configuration, for example, from the Run popup Alt+Shift+F10. |
| Allow parallel run | Select to allow running multiple instances of this run configuration in parallel. By default, it is disabled, and when you start this configuration while another instance is still running, PyCharm suggests stopping the running instance and starting another one. This is helpful when a run/debug configuration consumes a lot of resources and there is no good reason to run multiple instances. |
| Store as project file | Save the file with the run configuration settings to share it with other team members. The default location is .idea/runConfigurations. However, if you do not want to share the .idea directory, you can save the configuration to any other directory within the project. By default, it is disabled, and PyCharm stores run configuration settings in .idea/workspace.xml. |

Toolbar

The tree view of run/debug configurations has a toolbar that helps you manage configurations available in your project as well as adjust default configurations templates.

| Item | Shortcut | Description |
|---|---|---|
| | Alt+Insert | Create a run/debug configuration. |
| | Alt+Delete | Delete the selected run/debug configuration. Note that you cannot delete default configurations. |
| | Ctrl+D | Create a copy of the selected run/debug configuration. Note that you create copies of default configurations. |
| | | The button is displayed only when you select a temporary configuration. Click this button to save a temporary configuration as permanent. |
| Move into new folder / Create new folder | | You can group run/debug configurations by placing them into folders. To create a folder, select the configurations within a category, click , and specify the folder name. If only a category is in focus, an empty folder is created. Then, to move a configuration into a folder, between the folders or out of a folder, use drag or and buttons. To remove grouping, select a folder and click . |
| | | Click this button to sort configurations in alphabetical order. |

Before launch

In this area, you can specify tasks to be performed before starting the selected run/debug configuration. The tasks are performed in the order they appear in the list.


| Item | Shortcut | Description |
|---|---|---|
| | Alt+Insert | Click this icon to add one of the following available tasks: … |
| | Alt+Delete | Click this icon to remove the selected task from the list. |
| | Enter | Click this icon to edit the selected task. Make the necessary changes in the dialog that opens. |
| | Alt+Up / Alt+Down | Click these icons to move the selected task one line up or down in the list. The tasks are performed in the order that they appear in the list. |
| Show this page | | Select this checkbox to show the run/debug configuration settings prior to actually starting the run/debug configuration. |
| Activate tool window | | By default this checkbox is selected and the Run or the Debug tool window opens when you start the run/debug configuration. Otherwise, if the checkbox is cleared, the tool window is hidden. However, when the configuration is running, you can open the corresponding tool window for it yourself by pressing Alt+4 or Alt+5. |